Building a Docker cluster with Nomad, Consul and SaltStack on TransIP

Part 1 of the Avoiding the Cloud series: an in-depth series on deploying a Docker cluster based on Nomad, Consul, SaltStack and GlusterFS to a non-standard provider like TransIP.

This series of articles covers setting up a new Docker cluster in a repeatable way, in the form of Cluster as Code. That means we want to be able to check out one or more repositories and restore an equivalent cluster with few or no manual steps. Everything should live as code, scripts and configuration in a Git repository.

It takes quite a few steps to get up and running, but after that it's easy-peasy. I've tried to be as complete as possible and include all the code and steps you need to set up your own Docker cluster using SaltStack, Nomad and Consul on any provider where you can provision a VPS with an Ubuntu 20.04 image. If anything is missing or unclear, just comment below and I'll try to help.

In this article we'll use TransIP as the provider because I'm familiar with them. Only the VPS provisioning step really depends on their API, and it can easily be replaced by another provider-specific process. If your favorite provider supports Cloudinit, as TransIP does, that makes life even easier.

Now on to the description of what we are trying to do.

Technology Picks

Container Scheduling

Our container platform will be based on Docker. Before starting on this setup I did not have any real-life experience with orchestration tools, apart from using docker-compose for a small containerized development environment. For a cluster that supports redundancy, docker-compose is not complete enough.

The three tools most often recommended for container orchestration were Docker Swarm, Kubernetes and Nomad. After some research I decided against Kubernetes and Docker Swarm and picked HashiCorp's Nomad. It simply seems more developer-friendly.

If you use Nomad, you should use HashiCorp's Consul as well for service discovery and live configuration sharing. HashiCorp is known for their developer-friendly mindset.

It's important to note that both are easy to use in a Cluster as Code environment.

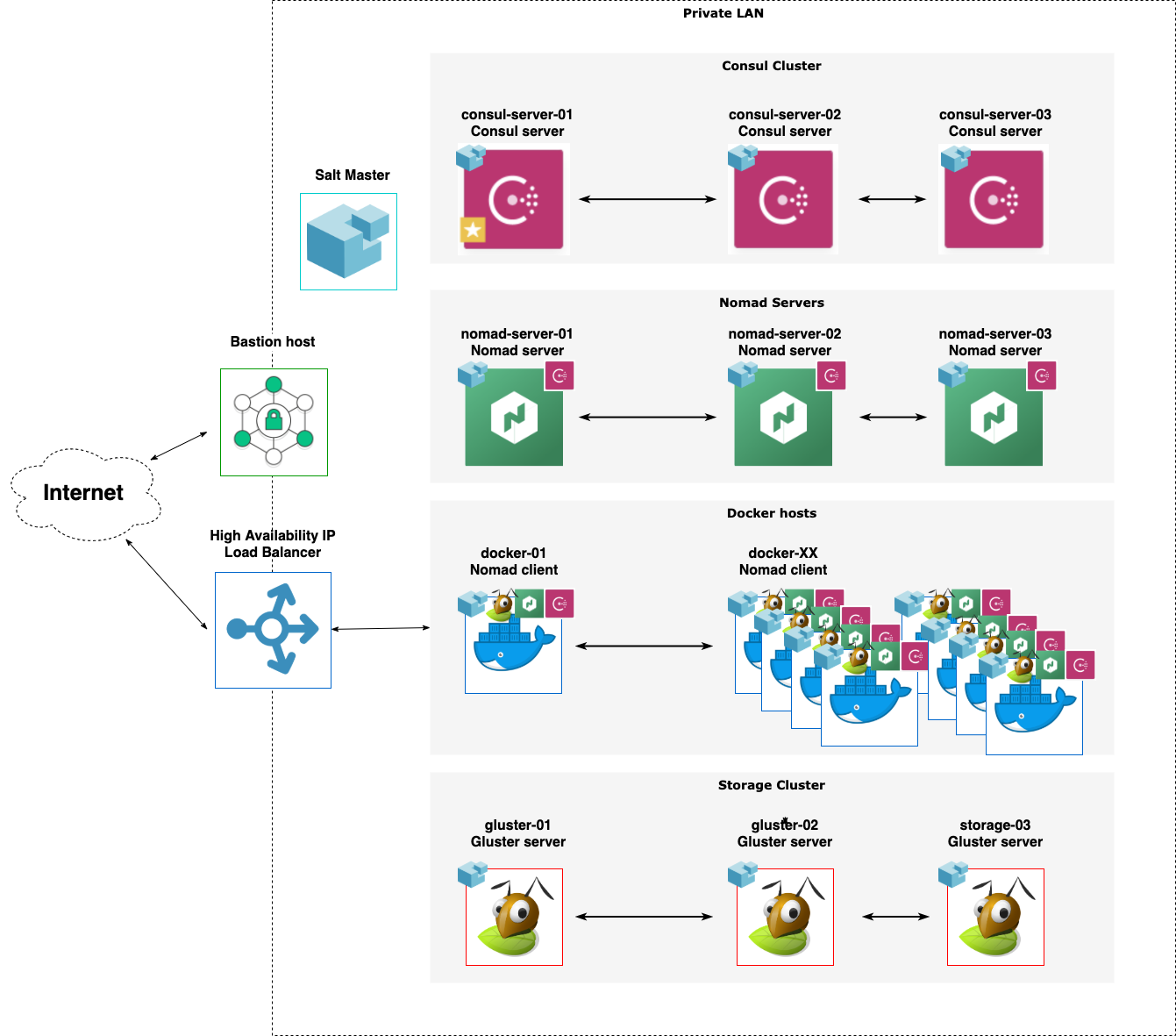

For a redundant cluster, the recommended setup is a Consul cluster of at least 3 Consul servers. The Nomad reference architecture likewise calls for at least 3 Nomad servers.

All Nomad servers and Nomad clients need to have a Consul client as well.
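Why at least 3? Both Consul and Nomad servers replicate their state with the Raft consensus protocol, which needs a majority (quorum) of servers to agree before it can make progress. The small sketch below is only an illustration of that arithmetic, not code from either tool; it computes the quorum size and how many server failures a cluster of a given size can survive:

```python
def raft_quorum(servers: int) -> int:
    """Number of servers that must agree for a Raft cluster to make progress."""
    if servers < 1:
        raise ValueError("need at least one server")
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """How many servers can fail while a quorum is still reachable."""
    return servers - raft_quorum(servers)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} servers: quorum {raft_quorum(n)}, "
              f"tolerates {failure_tolerance(n)} failure(s)")
```

With 3 servers the cluster survives one failure; 4 servers still only survive one, which is why odd server counts are the usual recommendation.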

Configuration Management

Of course we don't want to configure all the different nodes manually. There are at least four distinct types of nodes in our cluster:

- Consul servers

- Nomad servers

- Nomad clients / Docker hosts

- GlusterFS servers

Each one requires its own packages, configuration and firewall settings. That's not a task to do by hand.
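To make the firewall part concrete, here is a hedged sketch of per-node-type rules expressed as `ufw` commands that only allow traffic from the private subnet. The port numbers are the upstream defaults (Salt 4505-4506, Consul 8300/8301/8500/8600, Nomad 4646-4648 plus its default dynamic port range 20000-32000, GlusterFS 24007-24008 plus one port per brick from 49152); verify them against the versions you install. The dictionary keys, the size of the brick range and the use of `ufw` are assumptions, and in the actual setup these rules would live in Salt states rather than a standalone script.

```python
from typing import Dict, List, Tuple

PRIVATE_SUBNET = "192.168.0.0/24"  # private network from the IP layout

# (port or "start:end" range, protocol) per node type, using upstream default ports.
# The GlusterFS brick range (one port per brick from 49152) is an assumed size.
FIREWALL_RULES: Dict[str, List[Tuple[str, str]]] = {
    "salt-master": [("4505:4506", "tcp")],               # Salt publish + return ports
    "consul-server": [
        ("8300", "tcp"),                                 # Consul server RPC
        ("8301", "tcp"), ("8301", "udp"),                # Consul LAN gossip
        ("8500", "tcp"),                                 # Consul HTTP API
        ("8600", "tcp"), ("8600", "udp"),                # Consul DNS
    ],
    "nomad-server": [
        ("4646:4648", "tcp"), ("4648", "udp"),           # Nomad HTTP, RPC, Serf
        ("8301", "tcp"), ("8301", "udp"),                # local Consul client gossip
    ],
    "docker": [
        ("4646", "tcp"),                                 # Nomad client HTTP API
        ("20000:32000", "tcp"), ("20000:32000", "udp"),  # Nomad dynamic task ports
        ("8301", "tcp"), ("8301", "udp"),                # local Consul client gossip
    ],
    "gluster": [
        ("24007:24008", "tcp"),                          # glusterd management
        ("49152:49251", "tcp"),                          # bricks (assumed up to 100)
    ],
}


def ufw_commands(node_type: str, subnet: str = PRIVATE_SUBNET) -> List[str]:
    """Return ufw commands that open a node type's ports to the private subnet only."""
    return [
        f"ufw allow from {subnet} to any port {port} proto {proto}"
        for port, proto in FIREWALL_RULES[node_type]
    ]


if __name__ == "__main__":
    for command in ufw_commands("consul-server"):
        print(command)
```

Public-facing ports for the web services are deliberately left out, since those are reached through the load balancer rather than the private network.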

There are a number of tools to centralize this configuration management. The best known are Puppet, Ansible, Chef and SaltStack. Again, I had no history with any of them, and no bias. After checking the documentation for all four, I decided that SaltStack fit my way of thinking and working best. The fact that customizations are written in Python helped as well.

SaltStack consists of a central Salt Master that communicates with and delegates tasks to Salt Minions. Each node in the network therefore needs a Salt Minion installed. Since a Salt Minion is required before a node can be configured, ideally it is installed automatically on each VPS as it's provisioned into our cluster.

Image provisioning

Ideally, using Nomad and Consul would also mean using HashiCorp's Terraform to provision the infrastructure; Terraform is widely recommended for provisioning infrastructure as code. But for this setup there were compelling reasons to use TransIP as the provider. And although there is a Terraform provider for TransIP, it does not really support real-world use cases. For instance, it does not allow re-installation of a node. So Terraform was off the table.

Luckily, TransIP does have a well-documented API for interacting with your account services, and it supports Ubuntu's Cloudinit to bootstrap the installation, including installing a Salt Minion. This lets us still do the provisioning in a fully scripted way, in line with our Cluster as Code approach.
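A minimal sketch of such Cloudinit user data, generated from Python: cloud-init's `salt_minion` module installs the minion package and writes its configuration from the `conf` mapping, and the top-level `hostname` key sets the host name. The master address follows the IP layout used in this article; the function name `build_user_data` is hypothetical, and how the user data is handed to the TransIP API is not shown here (that is the subject of Part 2).

```python
import json

SALT_MASTER_IP = "192.168.0.2"  # salt-master address from the IP layout


def build_user_data(hostname: str, master: str = SALT_MASTER_IP) -> str:
    """Render #cloud-config user data that names the VPS and installs a Salt Minion."""
    config = {
        "hostname": hostname,
        "salt_minion": {
            # Written by cloud-init into the minion configuration.
            "conf": {
                "master": master,
                "id": hostname,  # minion ID matches the hostname convention
            },
        },
    }
    # cloud-init only treats the data as cloud-config if the first line is this header.
    return "#cloud-config\n" + json.dumps(config, indent=2) + "\n"


if __name__ == "__main__":
    print(build_user_data("docker-01"))
```

Emitting JSON after the `#cloud-config` header works because YAML parsers accept JSON-style mappings, which avoids a PyYAML dependency. The new minion's key still has to be accepted on the master (for example with `salt-key`) unless you pre-seed keys.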

Network filesystem

Every cluster needs a network-based filesystem that gives different nodes access to persistent file storage. That allows the orchestrator to move tasks between nodes while making sure those tasks still have access to the same persistent volume they were using before. For databases especially, this is a very important requirement.

Based on the choices already made, GlusterFS seems like the best choice.

Our Cluster Stack

So the full cluster implementation consists of:

- Ubuntu Cloudinit to initially provision identical images with TransIP

- SaltStack to configure images for their purpose

- Consul for Service Discovery and Cluster configuration data

- Nomad for cluster and application scheduling

- GlusterFS for a scalable network filesystem

None of these nodes need to be reachable from the internet. TransIP offers a Private Network option where you can link VPSs together. None of the traffic running over the private network counts towards your network traffic limits.

Only the Nomad clients (which actually run our web services) need to be exposed via a load balancer. TransIP offers a High Availability IP (HAIP), which is practically a hosted load balancer with minimal configuration options. So while it can provide access to the web services we choose to expose, it can't be used to access your nodes for administration purposes. For that I suggest using something like a bastion host as a single access point into the private network.
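With a bastion host in place, OpenSSH's `ProxyJump` option lets you reach any private node with a single `ssh` command. The following sketch renders `~/.ssh/config` entries for that; the bastion's public address `bastion.example.com` and the login user `admin` are placeholders, not values from this setup.

```python
from typing import Dict

# Placeholder values: replace with the bastion's real public address and your login user.
BASTION_PUBLIC_HOST = "bastion.example.com"
SSH_USER = "admin"


def ssh_config(hosts: Dict[str, str], user: str = SSH_USER) -> str:
    """Render ssh_config entries that reach each private host via the bastion."""
    blocks = [
        "Host bastion\n"
        f"    HostName {BASTION_PUBLIC_HOST}\n"
        f"    User {user}\n"
    ]
    for name, private_ip in hosts.items():
        # ProxyJump (OpenSSH 7.3+) tunnels the connection through the bastion host.
        blocks.append(
            f"Host {name}\n"
            f"    HostName {private_ip}\n"
            f"    User {user}\n"
            "    ProxyJump bastion\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    print(ssh_config({"salt-master": "192.168.0.2", "consul-server-01": "192.168.0.10"}))
```

With those entries in your SSH config, `ssh salt-master` would connect to the bastion first and hop from there to 192.168.0.2.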

Practically that means our cluster looks something like this:

IP Layout

For practical reasons we'll use a fixed IP numbering scheme in our private network.

In this setup we'll use the following layout:

| Type | Expected number | Naming convention | IP range |
|---|---|---|---|
| Bastion Host | 1 | bastion | 192.168.0.1 |
| Salt Master | 1 | salt-master | 192.168.0.2 |
| Consul Cluster | 1 - 5 | consul-server-XX | 192.168.0.10 - 192.168.0.14 |
| Nomad Servers | 1 - 5 | nomad-server-XX | 192.168.0.20 - 192.168.0.24 |
| Docker Hosts | 1 - 70 | docker-XX | 192.168.0.30 - 192.168.0.99 |
| Storage Nodes | 1 - 50 | gluster-XX | 192.168.0.100 - 192.168.0.149 |

Host numbering starts at 01 for the first node of each type. So the first Consul server will be consul-server-01, the first Nomad server will be nomad-server-01, and so on.
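Because the scheme is fixed, a node's hostname and private IP can be derived from its type and number. A small sketch of that mapping, with ranges transcribed from the IP layout table (the names `RANGES` and `host_for` are hypothetical):

```python
from typing import Dict, Tuple

SUBNET_PREFIX = "192.168.0."

# Node type -> (last octet of node 01, maximum number of nodes of that type).
RANGES: Dict[str, Tuple[int, int]] = {
    "consul-server": (10, 5),
    "nomad-server": (20, 5),
    "docker": (30, 70),
    "gluster": (100, 50),
}


def host_for(node_type: str, number: int) -> Tuple[str, str]:
    """Return (hostname, private IP) for node `number` of a type; numbering starts at 1."""
    first_octet, max_count = RANGES[node_type]
    if not 1 <= number <= max_count:
        raise ValueError(f"{node_type} supports numbers 1..{max_count}, got {number}")
    hostname = f"{node_type}-{number:02d}"  # zero-padded, e.g. docker-07
    ip = f"{SUBNET_PREFIX}{first_octet + number - 1}"
    return hostname, ip


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(*host_for("consul-server", n))
```

Keeping this logic in one place means the provisioning scripts and the Salt configuration can't drift apart on which IP belongs to which host.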

Roles

Each of the types of nodes we use will have different roles for the different systems:

| Type | Salt Role | Consul Role | Nomad Role | Gluster Role |
|---|---|---|---|---|
| Bastion Host | | | | |
| Salt Master | Master | | | |
| Consul Cluster | Minion | Server | | |
| Nomad Servers | Minion | Client | Server | |
| Docker Hosts | Minion | Client | Client | Client |
| Storage Nodes | Minion | | | Server |
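One way the roles table could translate into configuration is a Salt top file that assigns states by minion ID glob, which fits the naming convention nicely. The sketch below renders such a `top.sls`. The state names such as `consul.server` and `glusterfs.client` are hypothetical, the Salt role is omitted because every targeted node is a minion, and installing Docker itself on the Docker hosts would need an extra state that the table does not list.

```python
from typing import Dict

# Roles per node type (hostname prefix), transcribed from the roles table.
# Each entry becomes a hypothetical Salt state named "<system>.<role>".
ROLES: Dict[str, Dict[str, str]] = {
    "consul-server": {"consul": "server"},
    "nomad-server": {"consul": "client", "nomad": "server"},
    "docker": {"consul": "client", "nomad": "client", "glusterfs": "client"},
    "gluster": {"glusterfs": "server"},
}


def top_sls(roles: Dict[str, Dict[str, str]]) -> str:
    """Render a Salt top file for the base environment, targeting minion IDs by glob."""
    lines = ["base:"]
    for node_type, systems in roles.items():
        lines.append(f"  '{node_type}-*':")
        for system, role in systems.items():
            lines.append(f"    - {system}.{role}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(top_sls(ROLES))
```

Because the glob targets rely on the minion ID, this only works if each minion's ID matches its hostname, as in the naming convention above.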

Conclusion

At this point we have determined which software products we are going to use for our Cluster as Code. Our next step is to set up our VPS images in Part 2: Reproducibly provisioning Salt Minions on TransIP.